Some editions of Dragon NaturallySpeaking (including Medical) support a Roaming User Profile feature. With this, you can store your voice profile on a server and download it to/upload it from computers on which you dictate. Like most aspects of Dragon NaturallySpeaking, it’s unnecessarily complex and flaky, but I got it to work in my distinctly non-enterprise environment a few weeks ago. For anyone else in a similar situation who wants their training, custom dictionaries and commands to follow them, I hope the following is helpful.

I assume here you have an existing local user profile to migrate. Dragon NaturallySpeaking’s WebDAV client is inefficient and includes many configuration options of dubious utility, but does (eventually) work. For WebDAV on IIS (or SMB), the instructions in the administration manual appear relatively complete. The manual mentions Apache compatibility but includes no setup information, nor could I find any elsewhere on the Internet. So, my server examples use WebDAV with the Apache HTTP server 2.4.x.

Setting up a WebDAV server

It's 2016 and you should be using SSL/TLS by now. Mozilla has a nice SSL configuration generator; this is the configuration I'm using. The newest protocol Dragon NaturallySpeaking 12 claims it supports is TLSv1, so the "modern" configuration likely won't work.
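If you want to confirm that your server still accepts a TLSv1 handshake before pointing Dragon at it, something like the following may help. It is only a sketch: `probe_tls1` is a made-up helper name, and it assumes your local `openssl` build still has TLSv1 enabled (many recent builds do not, in which case the probe fails no matter how the server is configured).

```shell
# Request a TLSv1 handshake, the newest protocol Dragon NaturallySpeaking 12
# claims to support, and print the negotiated protocol and cipher lines.
# Assumption: the local openssl build still permits TLSv1.
probe_tls1() {
    host=$1
    port=${2:-443}
    openssl s_client -connect "$host:$port" -servername "$host" -tls1 \
        </dev/null 2>&1 | grep -E 'Protocol|Cipher'
}
```

Run it as `probe_tls1 your.server.example`, substituting your own hostname. No output (or an alert message if you drop the `grep`) suggests the handshake was refused somewhere along the way.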

My configuration follows. Set up authentication however you like; I use digest auth behind SSL/TLS. Replace my file paths as appropriate. The Dragon NaturallySpeaking WebDAV client configuration includes options to follow redirects, but they don't work properly and aren't compatible with connection keep-alive. Thankfully, Apache has a workaround for such brokenness: the redirect-carefully environment variable, set below for the client's user agent. The client also expects infinite-depth PROPFIND requests to work, hence DavDepthInfinity on.

DavLockDB /var/www/sabi.net/webdav/dav_lock.db

<Directory /var/www/sabi.net/public/dragon>

Dav On

DavDepthInfinity on

AuthType Digest

AuthName dragon

AuthUserFile /var/www/sabi.net/etc/digest.passwd

Require valid-user

SSLRequireSSL

# Redirects don't work. At all.

BrowserMatch "Nuance component" redirect-carefully

RewriteEngine off

</Directory>
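The AuthUserFile above is an ordinary htdigest file. The usual way to create one is `htdigest -c /var/www/sabi.net/etc/digest.passwd dragon nriley`, which prompts for a password; the realm argument has to match AuthName. If you need to produce an entry non-interactively, here is a sketch of the same file format. `digest_line` is a hypothetical helper, and it assumes GNU `md5sum` is installed.

```shell
# Print one line in the format htdigest writes:
#   user:realm:MD5(user:realm:password)
# The realm must match AuthName in the <Directory> block ("dragon" here).
# Caution: a password passed as an argument is visible in the process list.
digest_line() {
    user=$1
    realm=$2
    password=$3
    hash=$(printf '%s:%s:%s' "$user" "$realm" "$password" | md5sum | cut -d' ' -f1)
    printf '%s:%s:%s\n' "$user" "$realm" "$hash"
}
```

For example, `digest_line nriley dragon 'your password' >> digest.passwd` appends an entry; keep the file outside the document root and readable only by the Web server.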

Make sure the directory is writable by the Web server user; mine looks like this:

drwxrwsr-x 4 nriley www-nriley 4.0K Apr 23 11:49 /var/www/sabi.net/public/dragon/
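One way to end up with permissions like those is sketched below. `make_dav_dir` is a hypothetical helper; it assumes the group you pass (www-nriley in my listing) includes the user Apache runs as, and that you are allowed to assign that group.

```shell
# Create a WebDAV root writable by both its owner and the Web server's group.
# Mode 2775 is rwxrwsr-x: the setgid bit (the "s") makes files created inside
# inherit the directory's group rather than the creator's primary group.
make_dav_dir() {
    dir=$1      # e.g. /var/www/sabi.net/public/dragon
    group=$2    # e.g. www-nriley; must contain the Apache user
    mkdir -p "$dir" || return 1
    chgrp "$group" "$dir" || return 1   # may need root or group membership
    chmod 2775 "$dir"
}
```

Afterward, `ls -ld` on the directory should show `drwxrwsr-x` and the group you chose.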

Setting up the WebDAV client

Documentation is here. Follow the instructions under Enable the Roaming User Profile feature and Set location of Master Roaming User Profiles.

In HTTP Settings, specify your username, password and an Authentication Type as appropriate. Under Connection, click Never for Follow Redirects and check the Keep Connection Alive box. I didn't change the Timeouts from the defaults.

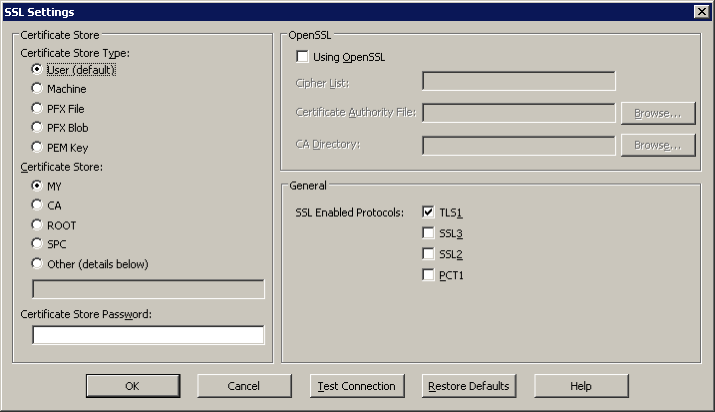

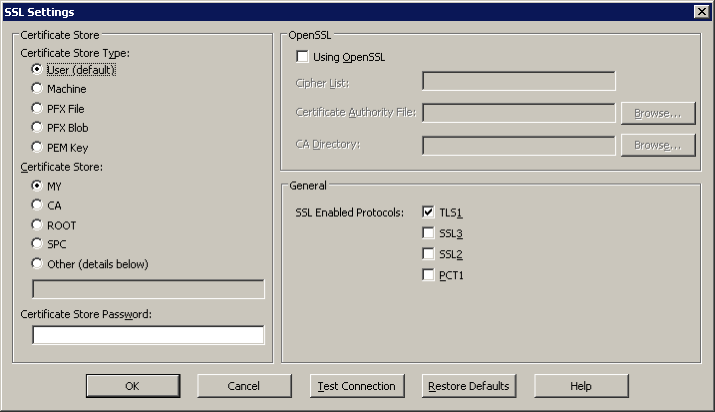

My SSL Settings are as follows:

I haven't actually tested if my server certificate is verified, but I do know enough not to check Using OpenSSL in an application that hasn't been updated in years.

Click Test Connection. If it fails, check your Apache logs; client-side feedback ranges from unhelpful to misleading. You'll notice that every single request is initially tried unauthenticated — I couldn't figure out a way to stop this from happening. Once I was confident that authentication was working, I filtered out these duplicate requests. Here’s the whole test:

% tail -fn 0 /var/www/sabi.net/logs/ssl.*~*.gz | grep nriley

nriley [23/Apr/2016:19:18:15 +0000] "PROPFIND /dragon HTTP/1.1" 207 1210 "-" "Nuance component"

nriley [23/Apr/2016:19:18:15 +0000] "DELETE /dragon/tst.tmp HTTP/1.1" 404 522 "-" "Nuance component"

nriley [23/Apr/2016:19:18:15 +0000] "PUT /dragon/tst.tmp HTTP/1.1" 201 442 "-" "Nuance component"

nriley [23/Apr/2016:19:18:15 +0000] "DELETE /dragon/TempDir HTTP/1.1" 404 522 "-" "Nuance component"

nriley [23/Apr/2016:19:18:16 +0000] "MKCOL /dragon/TempDir HTTP/1.1" 201 442 "-" "Nuance component"

nriley [23/Apr/2016:19:18:16 +0000] "DELETE /dragon/TempDir/tst1.tmp HTTP/1.1" 404 522 "-" "Nuance component"

nriley [23/Apr/2016:19:18:16 +0000] "PROPFIND /dragon HTTP/1.1" 207 6554 "-" "Nuance component"

nriley [23/Apr/2016:19:18:16 +0000] "PUT /dragon/TempDir/tst1.tmp HTTP/1.1" 201 458 "-" "Nuance component"

nriley [23/Apr/2016:19:18:16 +0000] "DELETE /dragon/TempDir/tst2.tmp HTTP/1.1" 404 522 "-" "Nuance component"

nriley [23/Apr/2016:19:18:16 +0000] "PROPFIND /dragon HTTP/1.1" 207 6554 "-" "Nuance component"

nriley [23/Apr/2016:19:18:16 +0000] "PUT /dragon/TempDir/tst2.tmp HTTP/1.1" 201 458 "-" "Nuance component"

nriley [23/Apr/2016:19:18:17 +0000] "PROPFIND /dragon/TempDir HTTP/1.1" 207 2858 "-" "Nuance component"

nriley [23/Apr/2016:19:18:17 +0000] "GET /dragon/TempDir/tst1.tmp HTTP/1.1" 200 341 "-" "Nuance component"

nriley [23/Apr/2016:19:18:17 +0000] "PROPFIND /dragon/TempDir/ HTTP/1.1" 207 1162 "-" "Nuance component"

nriley [23/Apr/2016:19:18:17 +0000] "MOVE /dragon/TempDir/tst1.tmp HTTP/1.1" 201 458 "-" "Nuance component"

nriley [23/Apr/2016:19:18:17 +0000] "MOVE /dragon/TempDir/ HTTP/1.1" 201 442 "-" "Nuance component"

nriley [23/Apr/2016:19:18:17 +0000] "COPY /dragon/newTempDir HTTP/1.1" 201 442 "-" "Nuance component"

nriley [23/Apr/2016:19:18:18 +0000] "DELETE /dragon/tst.tmp HTTP/1.1" 204 261 "-" "Nuance component"

nriley [23/Apr/2016:19:18:18 +0000] "DELETE /dragon/newTempDir HTTP/1.1" 204 293 "-" "Nuance component"

nriley [23/Apr/2016:19:18:18 +0000] "DELETE /dragon/newTempDir2 HTTP/1.1" 204 293 "-" "Nuance component"
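Filtering with `grep nriley` works because the unauthenticated first attempts are logged without a username. A slightly more general sketch, for when you don't want to hard-code the name, follows. `authenticated_only` is a hypothetical helper, and it assumes Apache's common or combined log format, where the third field is the remote user and is `-` for unauthenticated requests; adjust the field number if your LogFormat differs.

```shell
# Pass through only log lines whose remote-user field (field 3 in the
# common/combined log formats) is set, dropping the unauthenticated
# first attempt that precedes every request from the Dragon client.
authenticated_only() {
    awk '$3 != "-"'
}
```

Use it at the end of a pipeline in place of the `grep`, for example `tail -fn 0 access.log | authenticated_only`, where access.log stands in for your own log path.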

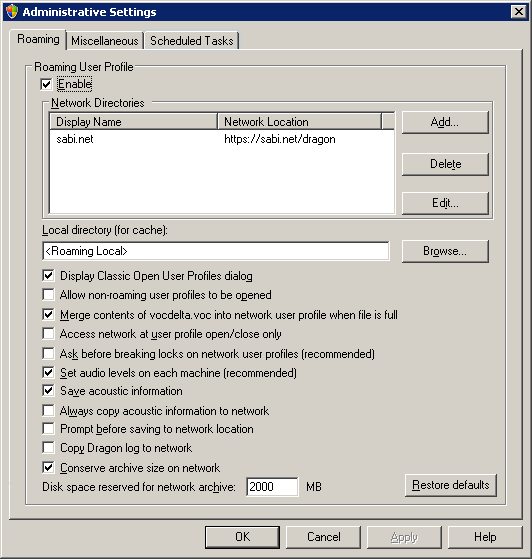

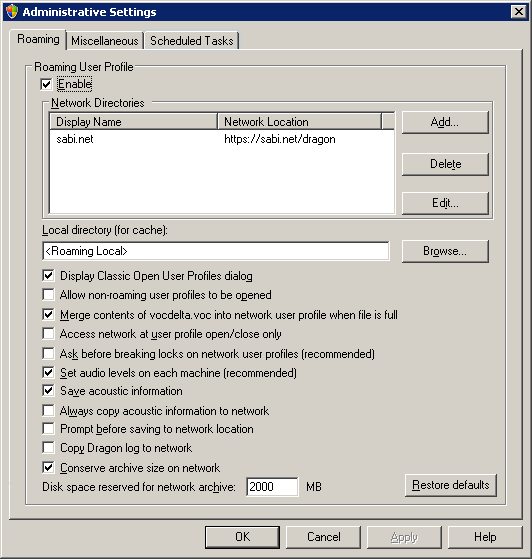

Roaming options

Nuance documentation is here and does a reasonably good job of explaining the options; I recommend you read it prior to my comments below. Here's how I have the roaming Administrative Settings configured:

If you’re going to be the only user, check Display Classic Open User Profiles dialog. This displays a flat rather than hierarchical list of users and dictation sources. Every time you click on anything in this dialog, be prepared for a long synchronous wait for server access. By disabling the hierarchy, you eliminate the wait while expanding your user. (If you only have one user and dictation source, you may not see this dialog at all.)

Allow non-Roaming User Profiles to be opened will need to be checked while you are migrating your user profile to a roaming profile, but can be unchecked afterward.

Merge contents of vocdelta.dat into network User Profile when file is full involves a 500K file; in a WAN environment with reasonably fast links, latency is likely to outweigh any time savings, so I kept this checked.

I unchecked Access network at User Profile open/close only because I keep my profiles open for days at a time and have an Internet connection available at all times. If your usage pattern is different, you may select otherwise.

Despite documentation suggesting that Ask before breaking locks on network User Profiles does not apply to profiles accessed through HTTP, I was asked to break a lock nearly every time I opened my profile until I unchecked it. There might be some server configuration that will let this be checked, but I’m unaware of it.

Always copy acoustic information to network and Conserve archive size on network are somewhat related. How you decide to limit/copy acoustic information really depends on your network performance, patience and desired strategy for propagating corrections and optimizing your profile.

Converting your profile

Again, there's official documentation which I won't repeat. There's no progress bar, just an unresponsive interface during migration; watch the server logs or your favorite network monitoring utility if you get nervous.

If you’ve been using Dragon NaturallySpeaking for some time, you may think of your profile as a large, unwieldy multi-gigabyte entity. Much of this is backups and audio data that aren’t strictly necessary — and you’ll notice that the server profile is much smaller because it omits them. My local profiles (compressed!) on two machines prior to migration were 1.4 and 1.1 GB; corresponding sizes on the server are 437 and 430 MB. ~320 MB of each is (primarily) audio in the voice_container subdirectory.
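To see where the space goes in your own server-side profile, a quick breakdown by subdirectory is enough. This is a sketch; `profile_sizes` is a made-up name, and the path you pass is whatever directory holds your roaming profile on the server.

```shell
# List each entry directly under a profile directory with its size in KB,
# largest first.
profile_sizes() {
    dir=$1
    du -sk "$dir"/* | sort -rn
}
```

In my case I would expect voice_container to come out on top.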

Once you're comfortable your roaming profile works, don't forget to delete your local profile(s).

Pitfalls

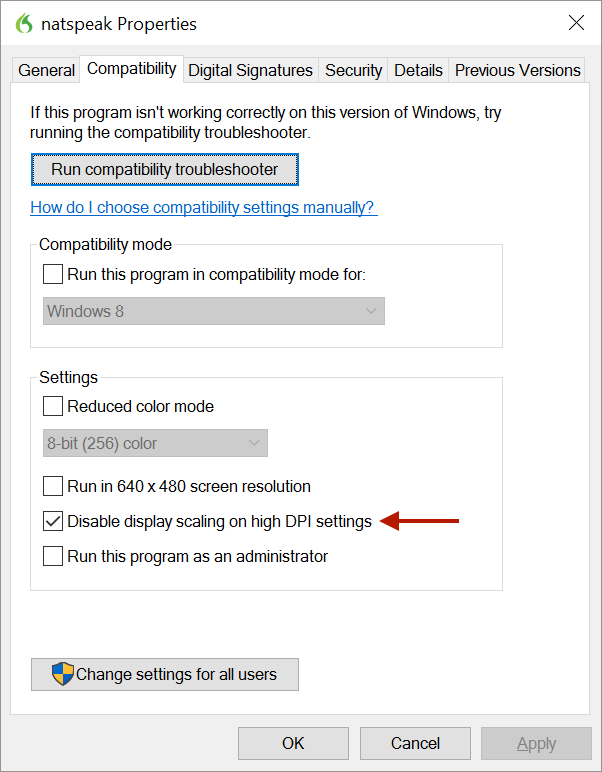

Much of the information here is out of date but an important and still-relevant sentence is "When using a roaming user profile, backup files cannot be generated in any location". The downside of backups not being written to the roaming profile is that if your profile becomes corrupted (which just happened for me today — I set up Dragon Medical Practice Edition on a new Windows 10 installation and subsequently DMPE crashed every time I opened the profile from my Windows 7 VMs) you’ll have to rely on your server backups. If you don’t have server backups — go fix that.
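If you have nothing in place yet, even a dated tarball of the WebDAV directory is better than nothing. The following is a minimal sketch, not what I actually run: `backup_profiles` and the archive naming are hypothetical, and it makes no attempt to avoid archiving while a client is mid-sync, so schedule it for a time when Dragon is closed.

```shell
# Archive a profile directory into a timestamped tarball and print the
# archive's path. Both arguments are placeholders for your own paths.
backup_profiles() {
    src=$1      # e.g. /var/www/sabi.net/public/dragon
    dest=$2     # directory that will hold the archives
    stamp=$(date +%Y%m%d-%H%M%S)
    archive="$dest/dragon-$stamp.tar.gz"
    mkdir -p "$dest" || return 1
    # -C keeps paths inside the archive relative to the parent directory
    tar -czf "$archive" -C "$(dirname "$src")" "$(basename "$src")" || return 1
    printf '%s\n' "$archive"
}
```

Called from cron or similar, this gives you something to restore from with `tar -xzf`; pruning old archives and copying them off the server are left out.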

The Language and Acoustic Optimizers don't run on a roaming profile; the idea is that you run them server-side. I plan on seeing how well they work on a fast network by remotely mounting the WebDAV share, but haven't had a chance to do this yet.

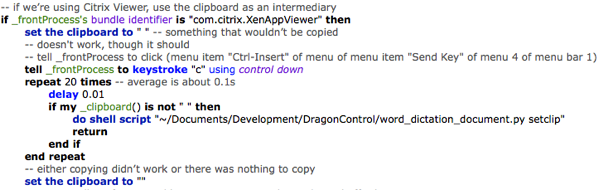

Dragon NaturallySpeaking startup and shutdown obviously take longer when the network is involved. You can automate opening a profile with a command-line argument to natspeak.exe, but you can't specify a dictation source (if you have more than one) without relying on AutoHotkey or similar. Thanks to various VMware Fusion and/or OS X bugs I already have to babysit dictation startup, so one more click to select a profile hasn't been a great additional hardship.

For more

My other dictation-related blog posts are in the Dictation category, if you're interested. Right now all my dictation effort is targeted at prose, but at some point I plan to investigate VoiceCode — which is currently in the process of being rewritten.

9:55 AM
