I dictate to my computer a lot. It helps me write faster and saves my hands for other pursuits.

In the last few years, dictation, both local and network-hosted, has improved to the point that this choice is no longer an infuriating time sink. Coding by dictation, as opposed to writing prose, is still in its infancy, and I continue to anxiously await Tavis Rudd’s release of the dictation system he demoed at several conferences last year (warning: videos may be NSFW thanks to some synthesized expletives).

In a conversation on Twitter earlier this week, I noted that, despite considerable enhancements in the past few years, dictation on OS X doesn’t get discussed much. Hence this post.

If you’re going to be playing with dictation, make sure you have a decent headset, properly positioned. Wired, noise-canceling USB headsets are not expensive, and even though Apple’s been adding microphones and improving noise canceling on their Macs recently, you still do better with a headset. If the dictation system you’re using doesn’t include an audio setup step, just record and play back some of your own speech to make sure it’s audible and relatively free of background noise.

On OS X, you have four choices for dictation:

Networked dictation

Networked dictation was introduced in OS X 10.8 Mountain Lion. It’s similar to the dictation service on iOS, and benefits from its use by Siri. I appreciate its well-executed incorporation into the OS: you can dictate effectively into nearly every text field; you can easily start and stop dictation from the keyboard; dictation alternatives (blue dotted underline) are part of the Cocoa text system; and dictated text nicely integrates its capitalization and sentence structure with the surrounding material. The software on the other end of the network has a huge vocabulary, including medical terms.

Usability disadvantages with this method of dictation as currently implemented include:

- no trainability (though, given it’s designed to be a speaker-independent system, this is less of an issue)

- no real-time feedback: dictation happens in 1-minute batches

- no editing by voice

- no error handling whatsoever. If the server fails to respond or recognize your words, up to a minute of spoken text is lost. This is somewhat understandable on iOS, but given the essentially infinite resources of OS X in comparison, it’s not defensible there. Ideally, I’d expect audio to be saved as a text attachment for deferred recognition, much like the Newton did with ink text.
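To make that last wish concrete, here is a minimal sketch of deferred recognition in Python. It is not how OS X or any Apple API works; `DeferredDictation`, `RecognitionError` and the `recognize` callable are hypothetical names I made up for illustration. The idea is simply that audio which fails recognition is kept in a queue and retried later instead of being thrown away.

```python
from collections import deque
from typing import Callable, Deque, List, Optional


class RecognitionError(Exception):
    """Hypothetical error raised when the recognizer returns no text."""


class DeferredDictation:
    """Keep audio that failed recognition so it can be retried later."""

    def __init__(self, recognize: Callable[[bytes], str]) -> None:
        # `recognize` stands in for a call to a networked recognizer (assumption).
        self._recognize = recognize
        self._pending: Deque[bytes] = deque()

    def dictate(self, audio: bytes) -> Optional[str]:
        """Return recognized text, or None if the audio was queued instead."""
        try:
            return self._recognize(audio)
        except RecognitionError:
            self._pending.append(audio)  # keep the audio rather than losing it
            return None

    def retry_pending(self) -> List[str]:
        """Retry queued audio in order, stopping at the first failure."""
        recovered: List[str] = []
        while self._pending:
            try:
                recovered.append(self._recognize(self._pending[0]))
            except RecognitionError:
                break  # still failing; leave the rest queued
            self._pending.popleft()
        return recovered

    @property
    def pending_count(self) -> int:
        return len(self._pending)
```

A real implementation would also need to persist the queued audio and show a placeholder in the text where the recognized words should eventually land, which is the part the Newton got right.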

There are also privacy issues, of course. I’m careful not to use this service to dictate anything sensitive, regardless of the promised or actual handling of my data.

“Enhanced Dictation”

OS X Mavericks (10.9) introduces “Enhanced Dictation”, a locally hosted version of Nuance’s recognizer. It’s neither installed nor enabled by default; you can turn it on in System Preferences. Like OS X’s networked dictation, Enhanced Dictation is not trainable and doesn’t let you edit by voice, but it does let you mix keyboard/mouse editing and dictation. While it does provide the feedback expected of a local recognizer and does away with the one-minute dictation limit, it’s the only one of these options I find unusable in practice.

Enhanced Dictation’s omissions of training and editing likely protect sales of the Dragon Mac products (discussed below). The bigger issue is that this seems fundamentally a speaker-dependent system without a method of training, resulting in frequent dictation errors you can’t fix. The vocabulary seems smaller than the networked alternative, though because of its frustratingly high error rate, I haven’t done a lot of testing. It also uses a lot of memory.

Dragon products

Nuance offers Dragon Dictate for Mac, MacSpeech Scribe and Dragon Dictate Medical. The Mac-specific components of these products and their predecessors have always been buggy and flaky. My experiences with the support and sales surrounding them have ranged from incompetence to sleaziness. I have purchased several versions and upgrades of these products going back to the original pre-OS X, Philips recognizer-based versions, but I’m not going to keep supporting software that is this poorly developed, sold and supported.

Windows in a virtual machine

Nuance’s Windows dictation products (Dragon NaturallySpeaking and Medical/Legal) are better than their Mac equivalents, though that’s not saying a lot. The UI is a scattered, slowly-evolving mess; true interaction between keyboard/mouse and voice editing is limited to individual versions of specific applications, and the medical product is expensive (upgrades are $500 on sale).

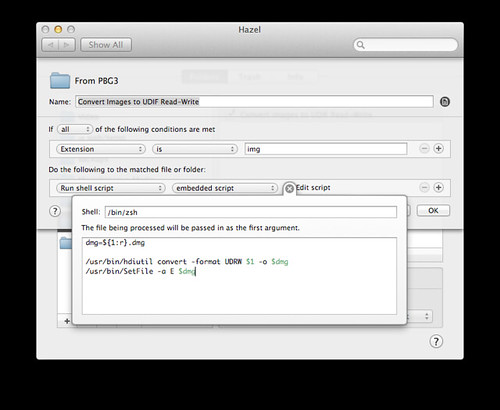

The main reason I dictate into Windows is the ecosystem surrounding the Dragon products there. There are quite a few abandoned research projects and other near-abandonware to contend with, but it’s possible with some effort to construct a productive system. What I’ve done thus far is nowhere near what Tavis Rudd did, but it works for me. Natlink is a Python framework for building recognition systems, with several macro languages/frameworks built on top including Unimacro, Vocola and Dragonfly (the basis of Tavis’s system).
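For a feel of what these macro frameworks do, here is a toy sketch in plain Python. It is not the Natlink, Vocola, Unimacro or Dragonfly API, and `CommandGrammar` is a hypothetical name of my own. It only shows the general idea: spoken phrases are matched against command patterns with free-text slots, and anything that doesn't match falls through as ordinary dictation.

```python
import re
from typing import Callable, List, Optional, Tuple


class CommandGrammar:
    """Map spoken phrases to actions; '<name>' marks a free-text slot."""

    def __init__(self) -> None:
        self._rules: List[Tuple[re.Pattern, Callable[..., str]]] = []

    def add(self, spec: str, action: Callable[..., str]) -> None:
        # Turn "go to line <n>" into a regex with one named group per slot.
        parts = []
        for token in spec.split():
            slot = re.fullmatch(r"<(\w+)>", token)
            parts.append(f"(?P<{slot.group(1)}>.+)" if slot else re.escape(token))
        pattern = re.compile(r"\s+".join(parts), re.IGNORECASE)
        self._rules.append((pattern, action))

    def handle(self, utterance: str) -> Optional[str]:
        """Run the first matching rule; None means 'treat as plain dictation'."""
        for pattern, action in self._rules:
            match = pattern.fullmatch(utterance.strip())
            if match:
                return action(**match.groupdict())
        return None


# Example rules; the returned strings are placeholders for real keystrokes.
grammar = CommandGrammar()
grammar.add("save file", lambda: "key:ctrl-s")
grammar.add("go to line <n>", lambda n: f"goto:{n}")
```

The real frameworks hand the grammar to the recognizer itself, so commands constrain what can be recognized rather than being matched against text after the fact.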

Microsoft also bundles speech recognition with Windows these days; I’ve used it very little, but it does work with Dragonfly.

My choices

I use OS X’s networked dictation for brief passages, and a Windows 7 VM for anything longer, like this post. I recently upgraded my Windows environment to the current Dragon Medical 2 (equivalent to NaturallySpeaking 12) and Word 2013. More on that setup is coming in my next post.

11:57 AM
